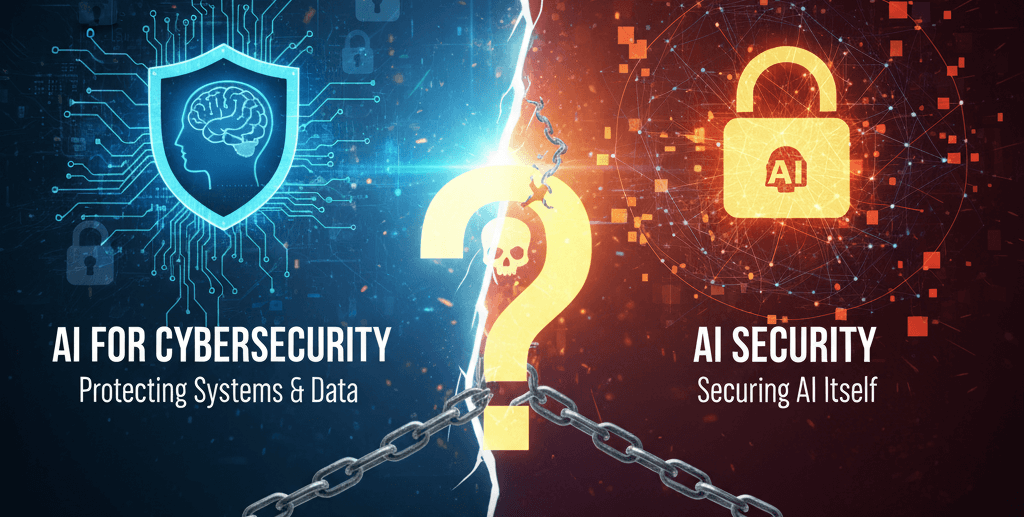

Demystifying AI for Cybersecurity vs. AI Security

The rise of Artificial Intelligence has brought about a dual transformation in the realm of digital protection. On one hand, AI offers unprecedented power to defend against ever-evolving cyber threats. On the other, AI itself presents a new attack surface and a unique set of security challenges. This often leads to confusion between two critical, yet distinct, concepts: AI for Cybersecurity and AI Security. Both are vital to a robust digital defence strategy, and understanding the difference between them is essential for effective implementation and risk mitigation.

AI for cybersecurity

AI for Cybersecurity refers to the application of AI and machine learning (ML) technologies to enhance an organisation’s defensive capabilities against cyberattacks. In essence, AI acts as a powerful tool to augment traditional security measures, often performing tasks that are too complex, voluminous, or rapid for human analysts alone.

Key applications and benefits:

- Threat detection and anomaly recognition: AI excels at processing vast amounts of data (network traffic, endpoint logs, user behaviour) to identify subtle patterns and anomalies indicative of malicious activity. Because it looks for deviations from normal behaviour rather than matching known signatures, it can help surface zero-day attacks, sophisticated malware, and insider threats that signature-based systems might miss.

- Automated incident response: AI can automate parts of the incident response process, such as isolating infected systems, blocking malicious IP addresses, or triggering alerts, significantly reducing response times and minimising damage.

- Vulnerability management: AI can analyse code, configurations, and system architectures to identify potential vulnerabilities more efficiently than manual methods.

- Predictive security analytics: By analysing historical threat data, AI can predict potential future attack vectors and help organisations proactively strengthen their defences.

- Phishing and spam detection: AI algorithms are highly effective at identifying and filtering out malicious emails and messages by analysing content, sender reputation, and behavioural cues.
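To make the idea of anomaly recognition concrete, here is a minimal Python sketch that flags values deviating sharply from a historical baseline using a z-score. It is a deliberately simple statistical stand-in, not how any particular commercial product works; the function name `flag_anomalies`, the example daily transfer volumes and the threshold of 3 standard deviations are all illustrative assumptions.

```python
from statistics import mean, stdev


def flag_anomalies(baseline, observations, threshold=3.0):
    """Return the observations whose z-score against the baseline exceeds threshold.

    baseline: historical values assumed to represent normal activity
              (here, hypothetical MB transferred per day by one user).
    observations: new values to score against that baseline.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation; needs at least 2 values
    if sigma == 0:
        # A perfectly constant baseline: any deviation from it is unusual.
        return [x for x in observations if x != mu]
    return [x for x in observations if abs(x - mu) / sigma > threshold]


# Hypothetical daily outbound volumes (MB) for one user over the past week.
baseline_mb = [120, 135, 110, 128, 140, 125, 118]
print(flag_anomalies(baseline_mb, [130, 900]))  # [900]
```

Real detection systems generally model many signals together and adapt their baselines over time; a single-feature z-score is only the simplest form of the "learn what normal looks like, then flag deviations" principle.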

Example: An AI-powered Security Information and Event Management (SIEM) system might detect an unusual login attempt from a geographically improbable location followed by a large data transfer, correlating these disparate events into a high-priority incident that a human analyst might miss.
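The correlation in this example can be sketched in a few lines of Python. The sketch assumes each login has already been geolocated (in practice usually from the source IP address, which is only approximate) and that outbound transfer volumes are available per user. The names `Login`, `is_impossible_travel` and `correlate`, the 900 km/h speed limit (roughly airliner cruising speed) and the 500 MiB transfer threshold are hypothetical choices for illustration, not any vendor's detection logic.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0


@dataclass
class Login:
    user: str
    timestamp: float  # seconds since the Unix epoch
    lat: float        # latitude in degrees (assumed already geolocated)
    lon: float        # longitude in degrees


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def is_impossible_travel(prev, curr, max_speed_kmh=900.0):
    """True if getting from prev to curr would require travelling faster than max_speed_kmh."""
    distance = haversine_km(prev.lat, prev.lon, curr.lat, curr.lon)
    hours = (curr.timestamp - prev.timestamp) / 3600
    if hours <= 0:
        return distance > 0  # simultaneous logins from two different places
    return distance / hours > max_speed_kmh


def correlate(prev, curr, transfers, threshold_bytes=500 * 1024**2, window_s=3600):
    """Combine two weak signals into one priority label.

    transfers: list of (timestamp, bytes_sent) pairs for the same user.
    Only transfers within window_s seconds after the current login count.
    """
    travel = is_impossible_travel(prev, curr)
    sent = sum(b for t, b in transfers if curr.timestamp <= t <= curr.timestamp + window_s)
    bulk = sent >= threshold_bytes
    if travel and bulk:
        return "high"    # both signals together: escalate as one incident
    if travel or bulk:
        return "medium"  # one signal alone: worth a look, but often benign
    return "low"


london = Login("alice", 0, 51.5074, -0.1278)
sydney = Login("alice", 2 * 3600, -33.8688, 151.2093)  # two hours later
print(correlate(london, sydney, [(2 * 3600 + 600, 2 * 1024**3)]))  # high
```

Neither signal alone is conclusive (VPNs, travel and backups all produce false positives), which is why the sketch only escalates to "high" when both coincide.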

AI security: protecting AI systems

AI Security, in contrast, focuses on protecting the integrity, confidentiality, and availability of the AI systems and models themselves. As AI becomes integrated into critical business processes, securing these systems from malicious manipulation, data leakage, and unintended behaviour is essential. It’s about ensuring the AI acts as intended, without being compromised or turned against its users.

Key challenges and considerations:

- Data poisoning attacks: Malicious actors can subtly introduce corrupted or biased data into an AI model’s training dataset, causing the model to learn incorrect patterns and make faulty predictions or classifications.

- Adversarial attacks: These involve crafting inputs with small, carefully designed and often imperceptible changes that trick an AI model into misclassifying them. For example, slight, carefully chosen changes to an image’s pixel values, invisible to a human viewer, might cause an object recognition model to identify it incorrectly.

- Model evasion: Attackers can learn how an AI model makes decisions and then create inputs that bypass its detection mechanisms while still achieving their malicious goals.

- Model theft/extraction: Attackers may attempt to reverse-engineer or steal proprietary AI models, potentially gaining access to sensitive algorithms or data the model was trained on.

- Data privacy concerns: AI models are often trained on vast amounts of data, which can include sensitive personal or corporate information. Ensuring this data is not leaked or misused during training or inference is a critical security concern.

- Bias and fairness: While not strictly a “security” threat in the traditional sense, inherent biases in AI models can lead to discriminatory outcomes or create vulnerabilities that attackers could exploit.

- Explainable AI (XAI): Understanding how an AI model arrives at its conclusions is crucial for debugging security issues, identifying vulnerabilities, and building trust.
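The adversarial-attack and model-evasion ideas above can be illustrated on the simplest possible model. The Python sketch below applies a fast gradient sign method (FGSM) style step to a hypothetical linear "malicious vs benign" classifier: because the gradient of a linear score with respect to its input is just the weight vector, nudging every feature by epsilon against the sign of its weight lowers the score as much as any perturbation can when no feature may change by more than epsilon. The weights, features and epsilon are invented, and the sketch assumes the attacker knows the model's weights (a "white-box" setting); an attacker without that knowledge might try to approximate them, for example through model extraction.

```python
def score(weights, bias, x):
    """Linear model score: positive means 'malicious', negative means 'benign'."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def sign(v):
    """Return 1, -1 or 0 according to the sign of v."""
    return (v > 0) - (v < 0)


def fgsm_evade(weights, x, epsilon):
    """FGSM-style evasion step against a linear model.

    d(score)/dx is simply `weights`, so moving each feature by
    -epsilon * sign(weight) reduces the score by epsilon * sum(|w|), the largest
    reduction possible when no feature may change by more than epsilon.
    """
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical model and input (e.g. normalised features of a file or request).
weights, bias = [0.8, -0.5, 1.2], -1.0
x = [1.0, 0.2, 0.5]
x_adv = fgsm_evade(weights, x, epsilon=0.2)

print(score(weights, bias, x) > 0)      # True: original input flagged as malicious
print(score(weights, bias, x_adv) > 0)  # False: perturbed input now passes as benign
```

Against deep models the same principle applies, but the gradient must be computed through the network rather than read directly off the weights.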

Example: A financial institution uses an AI model to detect fraudulent transactions. An attacker might use data poisoning to subtly alter the model’s understanding of “fraud,” allowing genuinely fraudulent transactions to pass undetected. Alternatively, an adversarial attack could craft a transaction that looks normal to human eyes but is specifically designed to evade the AI’s detection algorithms.
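The data-poisoning half of this example can be reduced to a toy Python sketch. A hypothetical nearest-centroid classifier learns the average transaction amount for "fraud" and "legit" records; the attacker then slips a batch of large transactions mislabelled as "legit" into the training data, dragging the "legit" centroid upwards until a genuinely fraudulent amount is classified as legitimate. All amounts, labels and function names are invented for illustration, and real fraud models use many more features. In this one-feature toy the attacker needs a sizeable share of poisoned rows, so the point is the mechanism rather than the scale.

```python
from statistics import mean


def train_centroids(samples):
    """Learn the mean transaction amount per label from (amount, label) pairs."""
    by_label = {}
    for amount, label in samples:
        by_label.setdefault(label, []).append(amount)
    return {label: mean(amounts) for label, amounts in by_label.items()}


def classify(centroids, amount):
    """Return the label whose centroid is closest to the given amount."""
    return min(centroids, key=lambda label: abs(amount - centroids[label]))


# Hypothetical clean training data (amounts are illustrative).
clean = [(20, "legit"), (35, "legit"), (50, "legit"), (80, "legit"), (120, "legit"),
         (900, "fraud"), (1500, "fraud"), (2000, "fraud"), (2500, "fraud")]

# Attacker-injected records: large amounts deliberately mislabelled as legitimate.
poison = [(1400, "legit")] * 6

clean_model = train_centroids(clean)              # legit 61, fraud 1725
poisoned_model = train_centroids(clean + poison)  # legit ~791, fraud 1725

print(classify(clean_model, 1200))     # fraud
print(classify(poisoned_model, 1200))  # legit: the same transaction now slips through
```

Nothing about the model's code changed; only its training data did, which is why controls over who can add or relabel training records matter as much as the model itself.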

Why both AI for cybersecurity and AI security are essential

While distinct, AI for Cybersecurity and AI Security are increasingly interdependent.

- An organisation leveraging AI for cybersecurity must also ensure the security of those AI-powered tools. If the AI threat detection system itself is compromised through a data poisoning attack, its ability to protect the network is severely undermined.

- Conversely, robust AI security practices contribute to a stronger overall cybersecurity posture. By protecting your AI assets, you prevent them from becoming new vectors for attack against your broader infrastructure.

In conclusion, as AI continues to permeate every facet of business operations, a comprehensive security strategy must address both fronts. Organisations must harness the power of AI to defend their digital assets while simultaneously implementing stringent measures to protect their AI systems from emerging threats. This dual approach is not merely a best practice; it is a fundamental requirement for navigating the complex and evolving cybersecurity landscape of the AI era.

Navigating this complex landscape needs more than just tools; it requires a strategic partner with deep expertise. Mondas offers a comprehensive approach to securing your digital environment, leveraging a wide range of powerful AI for cybersecurity tools from leading vendors such as SentinelOne (endpoint protection), Tenable (exposure and vulnerability management), and Horizon3.ai (autonomous penetration testing).

With a dedicated team of seasoned professionals, Mondas helps organisations understand their unique risks and create a clear path forward. We build a robust Artificial Intelligence Management System (AIMS) to ensure responsible and secure AI deployment and can support organisations on their path to ISO/IEC 42001 certification, the international standard for AI management systems.