Shadow AI is the term for employees using artificial intelligence tools and apps without any cyber governance. Similar to Shadow IT, Shadow AI could be the use of Copilot, Google Gemini, DeepSeek, ChatGPT and other LLMs or AI tools outside the protected network that IT or security teams are aware of.

Artificial Intelligence (AI) has brought opportunities for innovation and efficiency, but there is growing concern around Shadow AI. This phenomenon, often stemming from the best intentions of employees, presents a unique and complex challenge to cybersecurity frameworks.

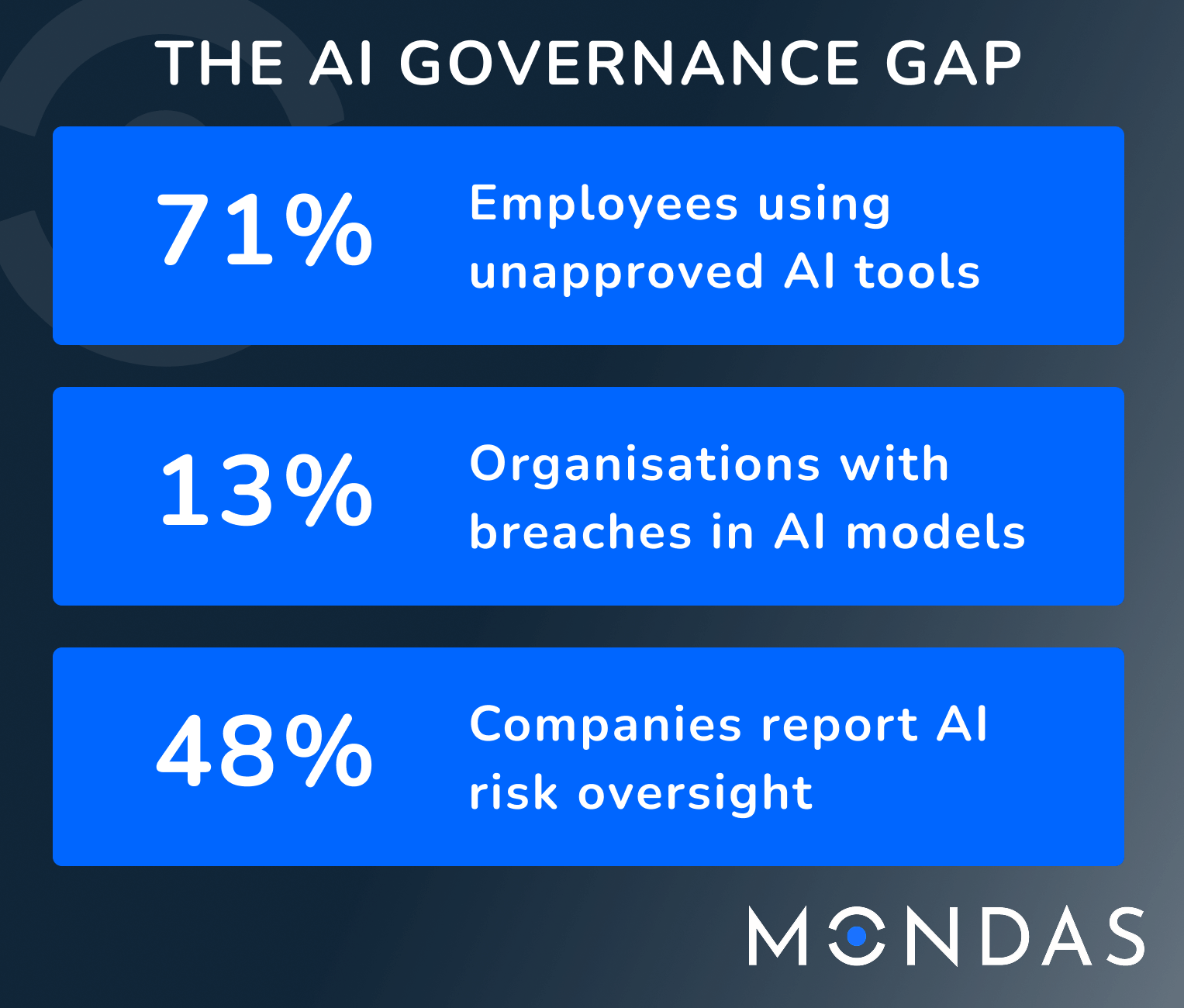

Recent research from Microsoft revealed that 71% of UK employees are using unapproved and unsecured AI tools at work, with 51% doing so weekly. 🔗View the full report.

More alarming still, only a third of those surveyed said they had any concern about privacy or the data they input into AI tools. Data from IBM compounds the concern: earlier this year it reported that 13% of organisations reviewed had reported a breach within their AI models or apps, and that 97% of these reported not having AI access controls in place. 🔗Read their update.

Tenable’s report, The State of Cloud and AI Security 2025, highlights that 48% of companies report AI risk as an enterprise risk oversight, three times the figure reported in 2024. 🔗Read more.

Shadow AI and the risks it poses

Generative AI (GenAI) tools, with their ability to quickly draft content, summarise information, or analyse data, offer a compelling solution for employees under pressure to work faster. The problem arises when these tools are adopted outside of an organisation’s approved IT infrastructure and security protocols.

Imagine an employee facing a tight deadline, using a public GenAI tool to quickly rephrase a sensitive internal document or analyse customer data. While their intent is to improve their work, they might unknowingly be feeding proprietary, sensitive, or private information into a third-party AI model, effectively creating a data breach risk. This uncontrolled use of AI tools, often by employees who are unaware of the security implications, is the essence of Shadow AI. It’s a hidden layer of AI adoption that operates outside the visibility and control of an organisation’s IT and security teams.

It is notable that the US Congress recently banned the use of DeepSeek on all government-issued devices, which puts a spotlight on Shadow AI’s critical security concerns. 🔗 Read more on the Reuters website.

Locking Down and Shadow AI

It’s tempting to cover off the potential risks involved with AI by implementing stringent lockdown measures, blocking access to all external AI tools. But… if employees find their legitimate needs for efficiency are stifled by internal restrictions, they may resort to using personal devices or unapproved applications outside the corporate network to access AI tools. This effectively pushes AI use further into the shadows, making it even harder to detect, monitor, and mitigate.

This is the dilemma for organisations: how to balance the need for innovation and employee empowerment with robust data security. Our related article (read now) covers how CISOs placing an outright ban on the use of AI can drive employees to use their own devices. Here we explore how the explosive growth of AI platforms can lead to systems being used that have not been through proper security vetting processes.

How does AI pose a cybersecurity threat?

It’s important to recognise that AI isn’t just a tool employees might misuse; it’s also a powerful new weapon in the arsenal of cyber attackers. Malicious actors are increasingly leveraging AI and machine learning to:

- Enhance Phishing Attacks: AI can generate highly convincing and personalised phishing emails, making them harder to detect.

- Automate Malware Development: AI can assist in creating more sophisticated and evasive malware.

- Identify Vulnerabilities: AI can be used to scan networks and applications for weaknesses at an unprecedented speed.

- Automate Reconnaissance: AI can quickly gather vast amounts of public information about targets to inform attacks.

The threat landscape is continuously evolving, and ignoring the AI dimension leaves organisations vulnerable to a new generation of sophisticated cyber threats.

Is your security posture built for prompt-based threats?

Can your SIEM raise an alert when a user asks an LLM to summarise sensitive information? How can it possibly cover employees using their own phones and devices? All too often, this kind of potential breach leaves no alert and no trace.

How do organisations mitigate the risks posed by misuse of AI?

Addressing Shadow AI and the broader spectrum of AI-related cyber risks requires a multifaceted strategy that goes beyond simple bans.

Education and Awareness

The cornerstone of any effective cybersecurity strategy is informed employees. Regular training sessions should highlight the risks associated with using unapproved AI tools, explain data privacy implications, and provide clear guidelines on acceptable use. Employees need to understand why certain tools are restricted and the potential impact of their actions.

Visibility and Discovery

Organisations need robust tools and processes to identify where AI is being used across their networks. This involves monitoring network traffic, application usage, and data flows to uncover instances of Shadow AI.
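
As a rough illustration of what discovery can look like in practice, the sketch below counts requests to known AI services in web proxy logs. It assumes a simple whitespace-separated log format (`timestamp user url`), and the hostname watchlist and the `find_shadow_ai` helper are hypothetical examples rather than a complete or authoritative list; a real deployment would rely on your proxy, CASB or SIEM tooling.

```python
from collections import Counter
from urllib.parse import urlparse

# Illustrative watchlist only (an assumption): extend it to match your environment.
AI_DOMAINS = {
    "chatgpt.com",
    "gemini.google.com",
    "chat.deepseek.com",
    "copilot.microsoft.com",
}


def find_shadow_ai(log_lines, approved=frozenset()):
    """Count requests per (user, host) to watched AI hosts that are not approved.

    Each log line is assumed to look like: 'timestamp user url'.
    Lines that do not fit that shape are skipped.
    """
    hits = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) < 3:
            continue
        user, url = parts[1], parts[2]
        host = (urlparse(url).hostname or "").lower()
        if host in AI_DOMAINS and host not in approved:
            hits[(user, host)] += 1
    return hits


if __name__ == "__main__":
    sample = [
        "2025-11-05T09:00:00Z alice https://chatgpt.com/c/1",
        "2025-11-05T09:02:00Z bob https://copilot.microsoft.com/",
    ]
    # Copilot is treated as the approved tool in this example.
    for (user, host), count in find_shadow_ai(sample, approved={"copilot.microsoft.com"}).items():
        print(f"{user} -> {host}: {count} request(s)")
```

This only sees traffic that passes through the corporate proxy, so it will not catch use on personal devices; that gap is why discovery needs to be paired with education and approved alternatives.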

Policy and Governance

Develop clear, comprehensive policies regarding the use of AI tools, both approved and unapproved. These policies should outline data handling protocols, acceptable data types for AI processing, and consequences for non-compliance.
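
One way to make such a policy easier to enforce is to express it in a machine-readable form. The sketch below is a minimal example of that idea; the tool names, the classification labels and the `is_permitted` helper are all placeholders we have made up for illustration, not part of any standard.

```python
# Hypothetical policy: which data classifications each tool may receive.
# Tool names and labels are placeholders, not real products or a standard scheme.
POLICY = {
    "approved-assistant": {"public", "internal"},
    "public-chatbot": {"public"},
}


def is_permitted(tool, classification, policy=POLICY):
    """Return True only if the tool is listed and the data class is allowed for it."""
    return classification in policy.get(tool, set())


if __name__ == "__main__":
    print(is_permitted("approved-assistant", "internal"))  # allowed by the policy above
    print(is_permitted("public-chatbot", "internal"))      # not allowed
```

Unlisted tools are denied by default, which mirrors the principle that anything not explicitly approved should be treated as unapproved.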

Secure and Approved AI Solutions

Instead of outright bans, consider providing employees with secure, organisation-approved AI tools and platforms. These solutions can offer the efficiency employees seek while maintaining critical security controls and data governance.

Data Loss Prevention (DLP)

Implement and configure DLP solutions to prevent sensitive data from leaving the corporate network or being uploaded to unapproved external services, including GenAI platforms.
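
To give a feel for the kind of check a DLP rule performs, here is a simplified sketch that scans text bound for a GenAI tool for a few sensitive patterns. The patterns, the `scan_prompt` and `should_block` names, and the block-on-any-match rule are assumptions for illustration; commercial DLP products use far richer detection than these regular expressions.

```python
import re

# Deliberately simple, illustrative patterns (assumptions, not production rules).
PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "marker": re.compile(r"\b(?:confidential|internal only)\b", re.IGNORECASE),
}


def scan_prompt(text):
    """Return the sorted names of all patterns found in the text."""
    return sorted(name for name, pattern in PATTERNS.items() if pattern.search(text))


def should_block(text):
    """Block the upload if any sensitive pattern is present."""
    return bool(scan_prompt(text))


if __name__ == "__main__":
    prompt = "Summarise this CONFIDENTIAL memo for jane.doe@example.com"
    print(scan_prompt(prompt), should_block(prompt))
```

Pattern matching like this produces false positives and misses, so it works best as one layer alongside approved tools and user training rather than as the sole control.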

Establishing a Culture of Security

Foster an environment where employees feel empowered to report suspicious activity or accidental missteps without fear of reprisal. A culture of open communication about security enhances overall resilience.

ISO 42001

For organisations committed to demonstrating the highest levels of AI governance and risk management, pursuing ISO 42001 certification (Information technology – Artificial intelligence – Management system) is a strategic move. This international standard provides a framework for establishing, implementing, maintaining, and continually improving an AI management system. It directly addresses many of the challenges posed by Shadow AI by focusing on:

| Focus area | What it covers |
| --- | --- |
| Responsible AI Development and Use | Ensuring AI systems are designed and used ethically and securely. |
| Risk Assessment and Treatment | Identifying and mitigating AI-specific risks, including data breaches. |
| Data Governance | Establishing clear controls over data used by and generated by AI. |
| Transparency and Accountability | Promoting clear processes and responsibilities for AI use. |

Your Guide Through the AI Cyber Landscape

Navigating the complexities of Shadow AI and broader AI cyber threats can be daunting. At Mondas, we bring a wealth of experience and cutting-edge knowledge to help organisations like yours understand, mitigate, and manage these emerging risks.

Our team is adept at implementing general protective measures and can guide you on the path to achieving ISO 42001 certification, ensuring your AI strategy is secure, compliant, and future-proof. Don’t let the shadows of AI obscure your organisation’s potential; let us work with you on your path to secure innovation. Contact us.

This article was authored by George Eastman, our Sales and Marketing Manager at Mondas, who has a wealth of experience in working with organisations on their overall cyber security protection. 🔗Learn more about George on LinkedIn.

Last Updated 05/11/2025.