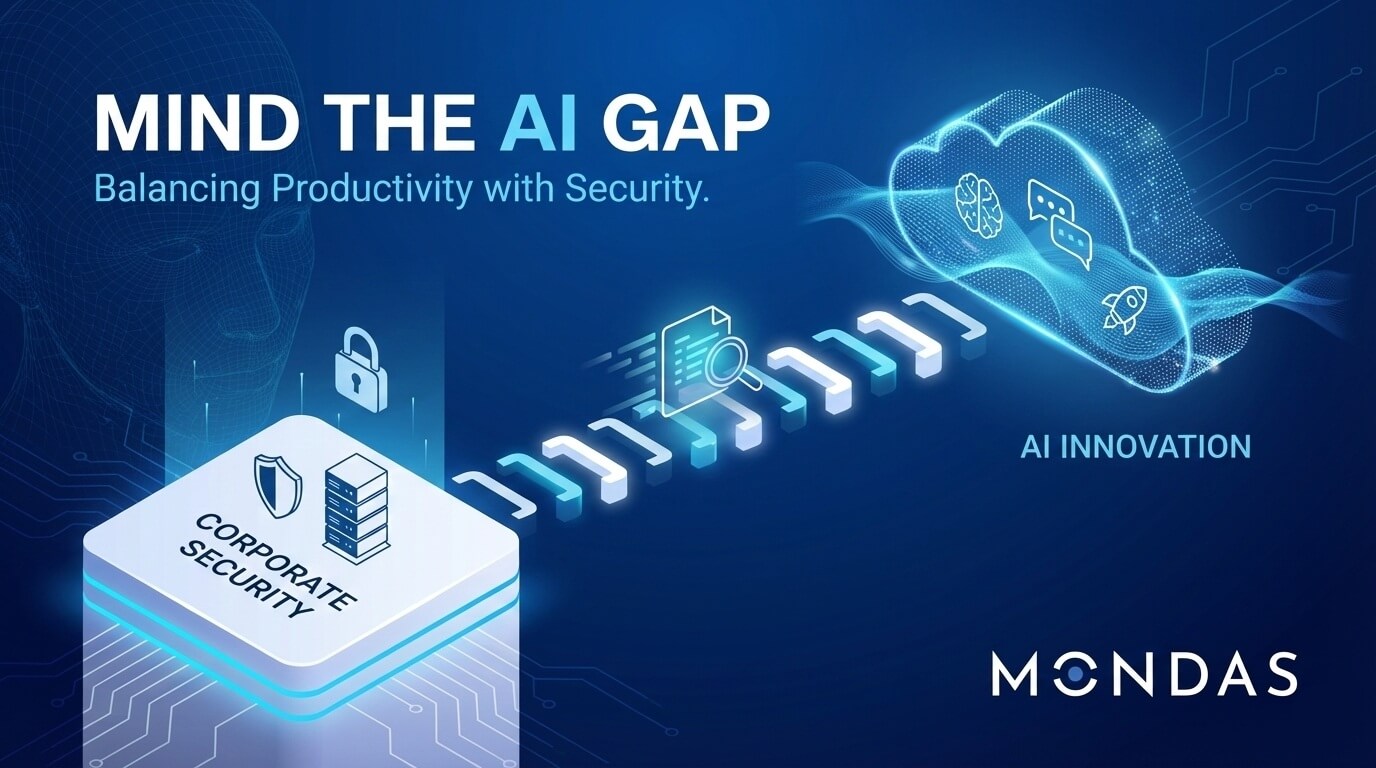

We are well past the hype phase of Artificial Intelligence. For IT Managers and CISOs, the conversation has shifted from “What is this?” to “How do we control this?”

AI gives organisations an undeniable productivity boost in coding, content generation, data synthesis and much more. But does it also widen the gap between operational reality and security posture?

At Mondas, we work daily to understand how AI can be such an invaluable accelerator for business while also bringing growing risks. We recommend that security leaders move beyond reactive fire-fighting and conduct a formal Gap Analysis.

In this article we look at how to assess your current standing and bridge the divide between productivity and protection.

Defining the AI Gap

In traditional cybersecurity, a gap analysis compares your current security controls against a standard (like ISO 27001 or NIST). In the AI era, that gap is more dynamic. It represents the distance between Shadow AI usage (what your staff are actually doing) and Governance Reality (what you think they are doing).

A 2026 outlook suggests that for many enterprises, this gap is where data leakage, IP theft, and compliance breaches are most likely to occur.

Step 1: Shadow AI

You can’t secure what you can’t see. The first step of your gap analysis isn’t policy writing; it’s discovery. Employees, eager to clear their inboxes or debug code, often bypass approved toolsets.

| Action | Audit your network traffic and CASB (Cloud Access Security Broker) logs for traffic to known generative AI endpoints (OpenAI, Anthropic, Midjourney, etc.). |

| Question | Are we seeing traffic to AI tools that we haven’t vetted? If the answer’s yes, you have an immediate visibility gap. |
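
As a rough illustration of that audit, the sketch below counts requests to AI domains that are not on a vetted list. It is a minimal example under stated assumptions, not a finished tool: the log format (`timestamp user url`), the watch list `AI_DOMAINS` and the allow list `VETTED_DOMAINS` are all hypothetical and would need replacing with your own proxy or CASB export and your own approved tools.

```python
from collections import Counter
from typing import Iterable, Optional
from urllib.parse import urlsplit

# Hypothetical watch list; extend it with the AI services relevant to you.
AI_DOMAINS = {"openai.com", "anthropic.com", "midjourney.com"}
# Hypothetical allow list of tools the organisation has already vetted.
VETTED_DOMAINS = {"openai.com"}


def matching_ai_domain(host: str) -> Optional[str]:
    """Return the watched domain that `host` belongs to, or None."""
    host = host.lower().rstrip(".")
    for domain in AI_DOMAINS:
        # Match the domain itself or any subdomain, but not lookalikes.
        if host == domain or host.endswith("." + domain):
            return domain
    return None


def unvetted_ai_traffic(log_lines: Iterable[str]) -> Counter:
    """Count requests per (user, domain) to AI domains that are not vetted.

    Assumes each line is 'timestamp user url', separated by whitespace.
    """
    hits: Counter = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 3:
            continue  # skip malformed lines rather than guess
        _, user, url = parts
        host = urlsplit(url).hostname or ""
        domain = matching_ai_domain(host)
        if domain and domain not in VETTED_DOMAINS:
            hits[(user, domain)] += 1
    return hits
```

Any non-empty result is a starting point for a conversation about which tools should be vetted, rather than evidence of wrongdoing.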

Step 2: Data Flow Assessment

Once you know where the data is going, you must ask what is leaving the building. The most critical gap often lies in Data Loss Prevention (DLP) rules that are outdated for AI contexts.

Traditional DLP might stop a credit card number from being emailed, but does it stop a proprietary code snippet or a confidential meeting transcript from being pasted into a public chatbot in exchange for a summary delivered in seconds?

| Action | Review your data classification tags. Do you have a specific category for AI-Restricted data? |

| Risk | Model Training is the new data breach. If your proprietary data is used to train a public model, it’s effectively leaked. |
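
One way to picture an AI-Restricted category is as the top of an ordered set of labels, with each destination allowed to receive data only up to a certain level. The sketch below assumes hypothetical label names and a hypothetical policy table (`LABELS`, `MAX_LABEL`); it is not taken from any particular DLP product, and real classification schemes will differ.

```python
# Hypothetical classification labels, ordered from least to most sensitive.
LABELS = ["public", "internal", "confidential", "ai-restricted"]

# Hypothetical policy: the most sensitive label each destination may receive.
MAX_LABEL = {
    "public_chatbot": "public",
    "enterprise_ai_tenant": "confidential",
}


def may_send(label: str, destination: str) -> bool:
    """Return True only if data with `label` may go to `destination`.

    Unknown labels and unknown destinations are refused (fail closed).
    """
    if label not in LABELS:
        return False
    ceiling = MAX_LABEL.get(destination)
    if ceiling is None:
        return False
    return LABELS.index(label) <= LABELS.index(ceiling)
```

In this sketch, nothing tagged `ai-restricted` can reach any AI destination, because no entry in the policy table allows it. Failing closed on unknown labels is a deliberate choice: untagged data is treated as unsafe until someone classifies it.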

Step 3: The Human Firewall Skill Gap

Technology is only half the equation; your team makes up the other half. There’s often a skills gap where employees treat AI agents like colleagues rather than the software tools they really are.

This anthropomorphism leads to lowered guardrails. Staff might share sensitive HR issues or financial projections with an AI because it feels conversational and private. It is neither.

| Action | Assess your current training modules. Do they specifically cover Prompt Injection awareness and Data Sanitisation before using AI tools? |

| Goal | Move your culture from blocking AI (which teams might ignore, resulting in Shadow AI) to a policy of “how we use AI without leaking the crown jewels”. |
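
To make Data Sanitisation concrete in training material, it can help to show what stripping identifiers from a prompt looks like. The sketch below is illustrative only: the two regular expressions are simplified assumptions that will miss some cases and flag some harmless numbers, so they are no substitute for a proper DLP control or for human judgement.

```python
import re
from typing import Dict, Tuple

# Simplified, hypothetical patterns for illustration only.
PATTERNS = {
    # Something that looks like an email address.
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    # 13 to 16 digits, optionally separated by spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def sanitise(text: str) -> Tuple[str, Dict[str, int]]:
    """Replace each match with a placeholder and report how many were found."""
    counts: Dict[str, int] = {}
    for name, pattern in PATTERNS.items():
        text, found = pattern.subn(f"[{name}]", text)
        counts[name] = found
    return text, counts


clean, counts = sanitise(
    "Email jane.doe@example.com about card 4111 1111 1111 1111 today."
)
print(clean)   # Email [EMAIL] about card [CARD] today.
print(counts)  # {'EMAIL': 1, 'CARD': 1}
```

The returned counts are useful in training: they show staff how much sensitive material was in a prompt they considered harmless.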

Step 4: The Tooling Gap

Finally, assess if your current security stack is AI-ready.

| Identity Management | Can you enforce MFA or SSO specifically for AI applications? |

| Vulnerability Scanning | Do your current scanners detect vulnerabilities in AI-generated code before it hits production? |

| Monitoring | Can you detect anomalous spikes in API usage that might indicate an “agentic” AI gone rogue or a credential stuffing attack? |
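
For the monitoring question, a very simple baseline check shows the idea. The sketch below assumes you already have a list of hourly API call counts for one key or service account (a hypothetical input), and flags any hour that sits well above the preceding window. The window size, threshold and minimum spread are arbitrary starting values, and production monitoring would normally rely on your SIEM or observability tooling instead.

```python
from statistics import mean, stdev
from typing import List, Sequence


def find_spikes(
    counts: Sequence[int], window: int = 24, threshold: float = 3.0
) -> List[int]:
    """Return indexes whose count exceeds the rolling baseline.

    An hour is flagged when its count is more than `threshold` standard
    deviations above the mean of the previous `window` hours.
    """
    spikes = []
    for i in range(window, len(counts)):
        baseline = counts[i - window:i]
        # Floor the spread at 1.0 so a perfectly flat baseline does not
        # flag trivial changes.
        spread = max(stdev(baseline), 1.0)
        if counts[i] > mean(baseline) + threshold * spread:
            spikes.append(i)
    return spikes
```

A flagged hour does not say whether the cause is a runaway agent, a stolen credential or a legitimate batch job; it only tells you where to look first.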

Bridging the Gap

The AI boost conundrum is solvable, but it requires a shift in strategy. It’s not about stifling innovation; it’s about wrapping that innovation in a secure architecture.

At Mondas, we aim to help organisations conduct gap analyses that look beyond the firewall, assessing the specific risks posed by LLMs and generative tools.

By combining best-in-class software with expert-led consultancy, we ensure your productivity boost doesn’t come with a security penalty.

Ready to measure your AI Gap? Contact Mondas today for a preliminary consultation. Want to learn more about ISO 42001 – the certification specifically built around AI? Click here to find out more.

This article was brought to you by Lance Nevill, our Cyber Security Director at Mondas. Lance works with organisations on their compliance, with a focus on ISO 42001 in this context. Learn more about Lance on 🔗LinkedIn here.

If you want to read more about managing AI in corporations, resources such as the 🔗NCSC’s Guidelines for Secure AI or the 🔗NIST AI Risk Management Framework are really useful.