In the last year, the conversation around Artificial Intelligence has been dominated by productivity: how it streamlines coding, automates copy, and accelerates research. Yet, in the shadow of this innovation, a parallel ecosystem has emerged. It’s the dark reflection of the tools we use daily, a suite of “Offensive AI” platforms designed specifically to weaponise the efficiency of Large Language Models (LLMs) for cybercrime.

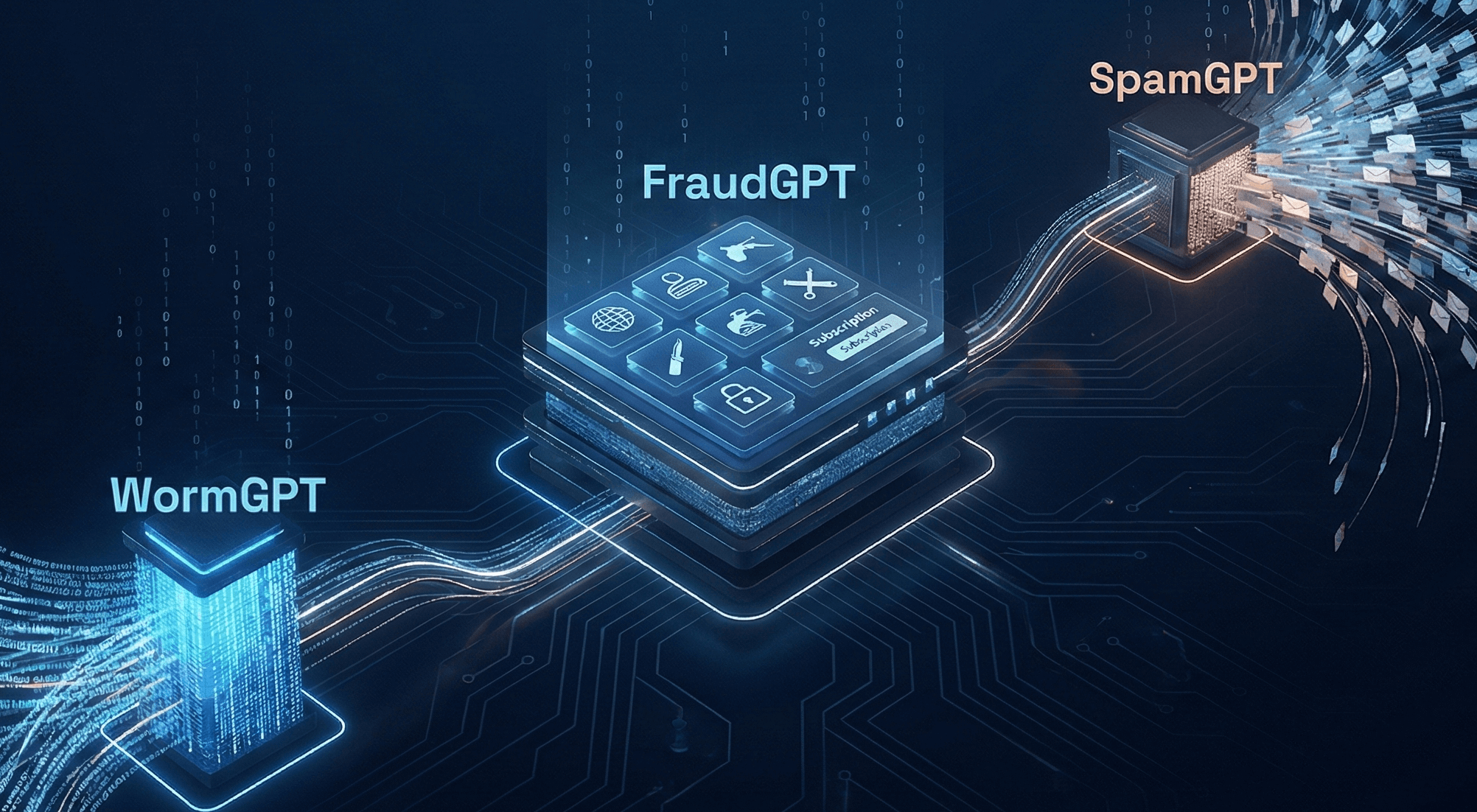

At Mondas, we believe in mastering the “Brilliant Basics” of security. But to defend effectively, we need to understand the offensive capability of the adversary. The barrier to entry for sophisticated cyberattacks has effectively collapsed, driven by three specific tools that are reshaping the threat landscape: WormGPT, FraudGPT, and SpamGPT.

What is WormGPT?

Built on the GPT-J open-source architecture, WormGPT was trained specifically on data related to malware creation and phishing. Its primary selling point is the total absence of “ethical guardrails.” When a threat actor asks ChatGPT to write a phishing email, it typically refuses. When they ask WormGPT, it obliges with terrifying competence.

If ChatGPT is the helpful assistant that refuses to answer unethical queries, WormGPT is its amoral twin.

The Threat:

- Perfect Syntax: It eliminates the poor grammar and spelling errors that were once the hallmarks of phishing attempts.

- Business Email Compromise (BEC): It excels at drafting highly personalised, urgent messages mimicking C-level executives. It can digest a CEO’s public speaking style and replicate their tone to authorise fraudulent transfers.

- Malware Generation: It lowers the technical bar, allowing novices to generate malicious Python scripts or obfuscated code.

What is FraudGPT?

Sold on dark web forums and Telegram channels for a monthly fee, FraudGPT offers an all-in-one suite for fraudsters. It doesn’t just write text; it provides the infrastructure for theft. It democratises access to sophisticated cyber capabilities, meaning an attacker no longer needs to be a master coder; they just need a subscription.

Where WormGPT is a tool, FraudGPT is a marketplace. It represents the subscription model applied to criminality, often dubbed “Hacking-as-a-Service” (HaaS).

The Capabilities:

- Scam Landing Pages: It can generate convincing facsimiles of banking portals or login pages for major services (Microsoft 365, Google Workspace) in seconds.

- Carding & Fraud: It assists in writing code to identify leaks and vulnerabilities in credit card processing.

- Undetectable Code: It claims to write malicious code that can bypass traditional antivirus solutions.

What is SpamGPT?

Imagine a high-end CRM used by top marketing agencies to A/B test subject lines and optimise open rates. SpamGPT offers this exact functionality to criminals. It is designed to overwhelm defences not just through volume, but through “smart” volume.

Perhaps the most insidious of the three, SpamGPT applies the rigour of legitimate marketing automation to phishing campaigns.

The Mechanism:

- A/B Testing Scams: It allows attackers to send small batches of different phishing variants to see which one bypasses filters and gets the most clicks. Once a “winner” is identified, it scales that specific message.

- Evasion: It is built to circumvent standard spam filters and anomaly detection by constantly tweaking message headers and content to appear legitimate.

- Volume: It automates the delivery of these messages at a scale that can paralyse standard email gateways.
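From the defender's side, one way to reason about A/B-tested variants is that they tend to share most of their wording. The sketch below is illustrative only and is not a description of how any particular email gateway works: it compares two message bodies using Jaccard similarity over three-word shingles. The function names and the 0.5 threshold are assumptions chosen for the example.

```python
def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Split text into overlapping word sequences ("shingles")."""
    words = text.lower().split()
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: set, b: set) -> float:
    """Share of shingles the two sets have in common (0.0 to 1.0)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def likely_same_campaign(msg_a: str, msg_b: str, threshold: float = 0.5) -> bool:
    """Flag two messages as probable variants of one campaign.

    The threshold is a hypothetical starting point, not a tuned value.
    """
    return jaccard(shingles(msg_a), shingles(msg_b)) >= threshold


variant_a = "Your account has been suspended please verify your details now to restore access"
variant_b = "Your account has been suspended please confirm your details now to restore access"
unrelated = "Minutes from the quarterly planning meeting are attached"

print(likely_same_campaign(variant_a, variant_b))
print(likely_same_campaign(variant_a, unrelated))
```

Grouping near-duplicates like this lets a security team treat a batch of tweaked messages as one campaign rather than as many unrelated emails.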

The Shift from “Breach” to “Identity”

The rise of these tools signals a fundamental shift in how organisations must view security. We are moving away from an era where “blocking the IP address” was sufficient. When the attack is written in perfect English, comes from a compromised legitimate account, and is optimised by AI to bypass filters, the human firewall becomes your last line of defence.

What This Means for UK Business Leaders:

- Scepticism is a Security Asset: The old indicators of a scam (typos, weird formatting) are gone. Staff must be trained to question the context of a request, not just its appearance.

- Verify Out-of-Band: If an email from the CEO asks for an urgent transfer, verify it via a secondary channel (phone call, Slack, or Teams).

- Embrace Defensive AI: As attackers use AI to speed up attacks, defenders must use AI to speed up detection. This is the new arms race.
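The first two points above can be expressed as a simple rule: judge a message by what it asks for, not by how polished it looks. The following is a minimal sketch under stated assumptions, not a production control. The `Email` class, the keyword lists, and the function name are all hypothetical and would need tailoring to your own organisation.

```python
from dataclasses import dataclass

# Hypothetical keyword lists for illustration; tune these to your own business.
URGENCY_TERMS = ("urgent", "immediately", "today", "asap", "confidential")
PAYMENT_TERMS = ("transfer", "payment", "invoice", "bank details", "wire")


@dataclass
class Email:
    sender: str
    subject: str
    body: str


def needs_out_of_band_check(email: Email) -> bool:
    """Return True when a message asks for money under time pressure.

    The check looks at the context of the request only, so a flawlessly
    written message is flagged just the same as a clumsy one.
    """
    text = f"{email.subject} {email.body}".lower()
    asks_for_payment = any(term in text for term in PAYMENT_TERMS)
    applies_pressure = any(term in text for term in URGENCY_TERMS)
    return asks_for_payment and applies_pressure


request = Email(
    sender="ceo@example.com",
    subject="Urgent",
    body="Please transfer the funds to the new supplier account today.",
)
routine = Email(sender="colleague@example.com", subject="Lunch", body="See you at noon.")

print(needs_out_of_band_check(request))
print(needs_out_of_band_check(routine))
```

A flagged message is not necessarily fraudulent; the flag simply means someone should confirm the request by phone or another secondary channel before acting on it.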

At Mondas, we monitor these developments not to spread fear, but to inform strategy. These tools are powerful, but they aren’t magic. They still rely on the human element in your team making a mistake. By fortifying your culture and ensuring your technical “basics” are robust, you can withstand even the most intelligent of storms.

Talk to us today to find out whether we can help protect the integrity of your data and digital ecosystem.

This article was brought to you by Lance Nevill, our Cyber Security Director at Mondas. Lance works with organisations towards their compliance, with a focus in this context on ISO 42001 or ISO 27001. Learn more about Lance on LinkedIn.

Further reading: NCSC, “AI and Cyber Security – What You Need to Know”.