We explore steps to stay cyber resilient in an AI-driven world.

The integration of Artificial Intelligence (AI) and Machine Learning (ML) into core business functions is a competitive imperative. From automated decision-making to predictive analytics, these intelligent systems are driving unprecedented efficiency and innovation. Yet this rapid digital evolution introduces a growing challenge: a significantly expanded and increasingly complex attack surface.

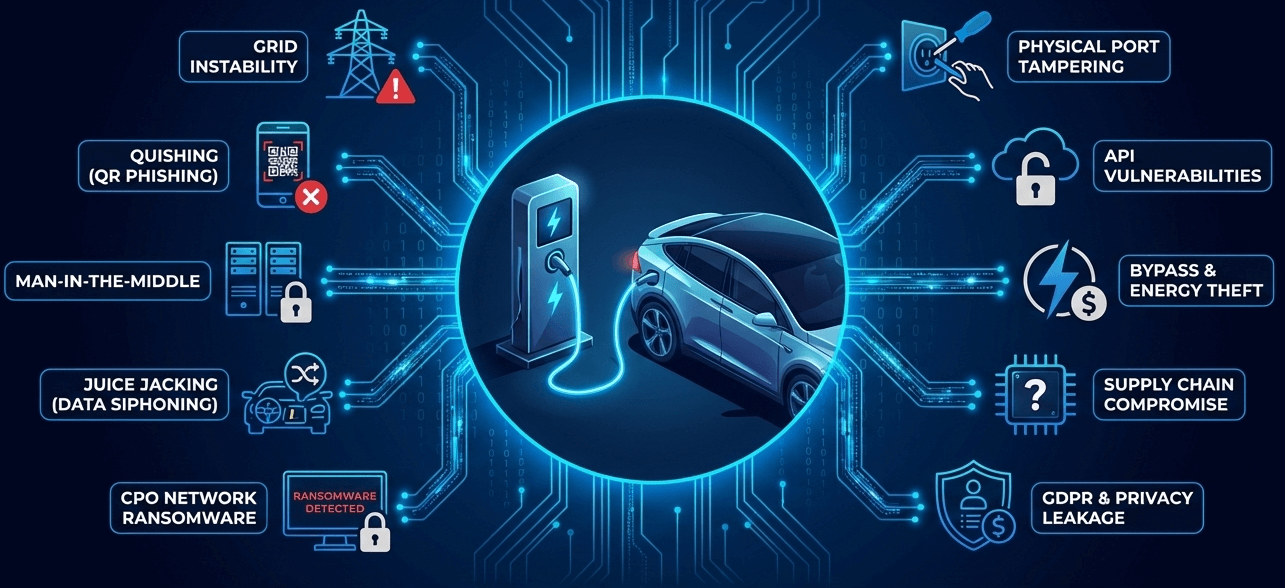

The modern cyber threat landscape is a battle of intelligence. Threat actors are leveraging the very same AI tools to scale their attacks, automate reconnaissance, and craft highly sophisticated scams such as deepfake-driven social engineering and advanced phishing campaigns. The traditional security perimeter, focused solely on network boundaries, is effectively obsolete. Organisations must recognise that innovation inherently creates new security risks, and that those risks demand a sophisticated, next-generation response.

A Holistic and Proactive Approach

To meet the expanding attack surface head-on, organisations must take a proactive, holistic approach to understanding and mitigating AI-specific risks. This shift moves security beyond simply reacting to incidents and embeds resilience directly into the AI lifecycle.

1. AI-Specific Security Architecture: Security by Design

Security can no longer be an afterthought. It must be a foundational element, or ‘security by design,’ embedded into the entire AI/ML pipeline.

- Robust Data Validation: The foundation of any AI system is its training data. Malicious actors know this, which makes data poisoning a critical attack vector. Implementing stringent, continuous data validation is essential to detect and filter corrupted or adversarial inputs before they compromise the model’s integrity.

- Secure MLOps Practices: Establishing secure MLOps (Machine Learning Operations) ensures that all code, models, and infrastructure components are version-controlled, scanned for vulnerabilities, and deployed under strict, automated governance.

- Zero Trust Access Controls: For all data and model repositories, strict Zero Trust principles must be applied. Never assume an internal component or user is safe; verify every access request and permission explicitly.
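
To make the data validation point above more concrete, here is a minimal sketch of a batch check that could run before training data reaches the model. It is an illustration under stated assumptions, not a complete poisoning defence: the `Record` type, the `ALLOWED_LABELS` schema, and the `max_mad_score` threshold are all hypothetical, and real pipelines would validate many features and use richer statistical tests.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Record:
    """A hypothetical training record: one numeric feature and a label."""
    feature: float
    label: str


# Assumed label schema for this example only.
ALLOWED_LABELS = {"benign", "malicious"}


def validate_batch(records, max_mad_score=6.0):
    """Split a batch into (accepted, rejected) records.

    A record is rejected if its label is outside the schema, or if its
    feature is an outlier relative to the batch median, measured in units
    of the median absolute deviation (MAD).
    """
    if not records:
        return [], []

    values = [r.feature for r in records]
    centre = median(values)
    # Fall back to a tiny value so identical features do not divide by zero.
    mad = median(abs(v - centre) for v in values) or 1e-9

    accepted, rejected = [], []
    for r in records:
        score = abs(r.feature - centre) / mad
        if r.label not in ALLOWED_LABELS or score > max_mad_score:
            rejected.append(r)
        else:
            accepted.append(r)
    return accepted, rejected
```

Rejected records would typically be quarantined for review rather than silently dropped, so that a sustained poisoning attempt leaves an audit trail.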

2. Continuous Monitoring for Model Drift and Adversarial Attacks

Traditional security monitoring focuses on network traffic and endpoints. This is no longer sufficient. Organisations need systems that can monitor the integrity and behaviour of the AI model itself.

- Detecting Model Drift: AI models are not static. Changes in real-world data can cause model drift, leading to inaccurate or, worse, potentially dangerous outputs. Continuous behavioural monitoring is vital to detect sudden, unexpected changes in model outputs that could signal either operational failure or a subtle, malicious input.

- Identifying Adversarial Attacks: Attackers craft subtle, malicious inputs designed to alter a model’s output without detection, allowing them to evade AI-powered controls. Defence mechanisms must include adversarial training, which exposes models to such inputs during development to harden them against these specific, targeted manipulations.
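
One common way to quantify the kind of output shift described under model drift is the Population Stability Index (PSI), which compares the distribution of recent model scores against a baseline. The sketch below assumes scores fall in the range 0 to 1. The function names and the 0.25 alert threshold are illustrative assumptions (0.25 is a widely used rule of thumb, not a standard), and a production monitor would also track input features and label feedback.

```python
import math


def psi(baseline, recent, bins=10, eps=1e-6):
    """Population Stability Index between two samples of scores in [0, 1]."""
    if not baseline or not recent:
        raise ValueError("both samples must be non-empty")

    def proportions(sample):
        counts = [0] * bins
        for s in sample:
            # Clamp so that out-of-range scores fall into the edge bins.
            idx = max(0, min(int(s * bins), bins - 1))
            counts[idx] += 1
        # Floor each proportion at eps so the logarithm is always defined.
        return [max(c / len(sample), eps) for c in counts]

    p = proportions(baseline)
    q = proportions(recent)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


def drift_detected(baseline, recent, threshold=0.25):
    """Return True when the PSI exceeds the (assumed) alert threshold."""
    return psi(baseline, recent) > threshold
```

A PSI of zero means the two score distributions fall into the bins identically. A value above the threshold does not say whether the cause is operational or malicious, so it should trigger investigation rather than an automatic response.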

3. Comprehensive Attack Surface Management (ASM)

The AI era introduces new assets such as specialised AI infrastructure, external APIs, and cloud-native functions, massively expanding the attack surface. A comprehensive view is non-negotiable.

- Real-time Asset Visibility: It is vital to have a full, real-time view of every digital asset, including cloud functions, APIs, and specialised AI infrastructure. Security teams cannot protect what they cannot see, and Shadow IT within the AI space is a significant risk. To achieve this level of visibility, organisations must adopt External Attack Surface Management (EASM) practices.

- Risk-Based Prioritisation: Tools and processes must be in place to identify, classify, and prioritise risks across this dynamic and massively expanded surface, ensuring the most critical and most exploitable vulnerabilities are addressed first. This often involves leveraging platforms that provide an objective, real-time security rating (such as SecurityScorecard’s A-F grade) to measure exposure across all public-facing assets, providing a common language for communicating risk.
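
As a rough illustration of risk-based prioritisation, the sketch below ranks findings by a simple score that combines severity, likelihood of exploitation, and exposure. The `Finding` fields, the scoring formula, and the `exposure_weight` value are hypothetical choices for this example. They do not represent how SecurityScorecard or any other rating platform calculates its grades.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """A hypothetical vulnerability finding on a single asset."""
    asset: str
    severity: float        # 0-10 scale (assumed)
    exploitability: float  # 0-1 estimated likelihood of exploitation (assumed)
    internet_facing: bool


def risk_score(finding, exposure_weight=2.0):
    """Combine severity, exploitability, and exposure into one number."""
    weight = exposure_weight if finding.internet_facing else 1.0
    return finding.severity * finding.exploitability * weight


def prioritise(findings):
    """Return findings ordered from highest to lowest risk score."""
    return sorted(findings, key=risk_score, reverse=True)
```

With this weighting, a moderately severe but easily exploited flaw on a public model API can rank above a severe flaw on an isolated internal system, which matches the aim of addressing the most exploitable exposures first.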

4. The Human Element

Technology is only one part of the solution. The expertise and guiding principles behind it are equally critical.

- Specialised Training: Invest in training data science and security teams on the unique threats to AI, such as model extraction and data inference attacks. Collaboration between these teams is crucial for a unified defence.

- Clear AI Governance: Clear AI Governance frameworks mandate ethical and security considerations throughout the entire AI lifecycle, from conception to retirement, and establish accountability for the system’s outputs and security.

Resilient AI Adoption

At Mondas, we understand that AI is a competitive necessity, and we believe that security should enable, not inhibit, this innovation. Our expertise in advanced cyber resilience helps you harness the power of intelligent systems while maintaining a robust defence.

- AI-Native Security Solutions: Tools that are specifically designed to protect models from poisoning, extraction, and adversarial inputs.

- Holistic Attack Surface Visibility: Comprehensive services that map and manage your dynamic environment, providing clarity across traditional IT and novel AI infrastructure.

- Strategic Consulting and Measurable Resilience: Our expert guidance helps you embed security into your AI development pipeline, ensuring compliance and operational integrity from the outset. This includes specialist consultation to help your organisation achieve and maintain a strong security rating through platforms like SecurityScorecard. A high, demonstrably consistent score is essential for Third-Party Risk Management (TPRM) within your AI supply chain and for communicating security posture to leadership.

The exponential growth of the digital world, driven by AI, requires an exponential upgrade to your security posture. Don’t let the expanded attack surface compromise your future. Partner with Mondas to ensure your AI systems are not just intelligent, but demonstrably cyber resilient.