ISO 27001 has long been the gold standard for Information Security Management Systems (ISMS). However, as organisations deploy generative AI and machine learning tools, they face AI-specific risks such as bias, hallucinations, and lack of explainability, which fall outside the traditional scope of information security.

We take a look at ISO 42001, the first global standard for an AI Management System (AIMS), and examine whether organisations already certified to ISO 27001 can integrate ISO 42001 without too much pain. Can your organisation harmonise these two standards to create a robust, future-proof governance structure?

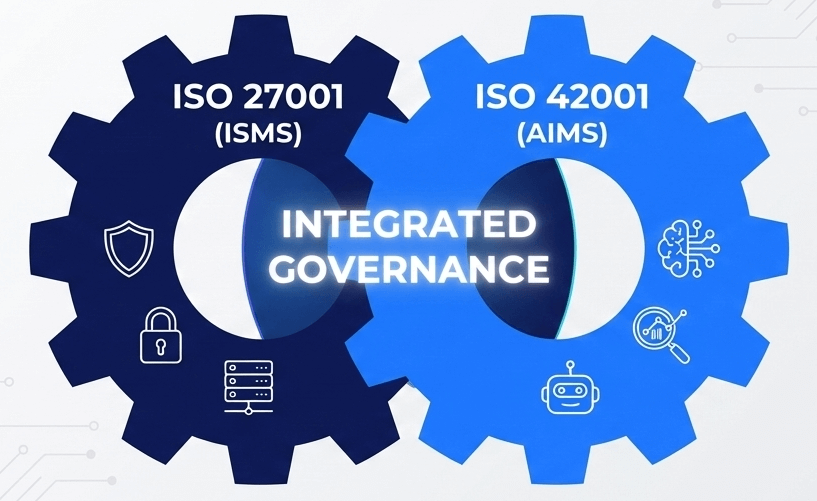

The case for harmonisation

Both ISO 27001 and ISO 42001 are built on the Harmonised Structure (HS), formerly known as the High-Level Structure (HLS), which is set out in Annex SL of the ISO/IEC Directives. This means they share the same core clause structure: Context, Leadership, Planning, Support, Operation, Performance Evaluation, and Improvement.

A unified management system ensures that:

- Duplication is minimised: you avoid running parallel internal audits or management reviews.
- Risk is holistic: you view security risks and AI safety risks through a single lens.
- Agility is increased: your governance framework can adapt to new AI tools faster without compromising security.

Step 1: Context of the Organisation (Clause 4)

Your existing ISMS already defines your interested parties and scope. To integrate ISO 42001, you need to expand this context.

ISO 27001 asks, “What threatens the confidentiality, integrity, and availability of our information?” ISO 42001 asks, “What threatens the responsible development and use of our AI systems?”

Action: Update your “Context of the Organisation” document to include AI-specific stakeholders (e.g., data subjects affected by automated decisions, regulators concerned with AI ethics) and define the boundaries of your AIMS.

Step 2: The Integrated Risk Assessment (Clause 6)

This is where the two standards diverge most. ISO 27001 risk assessments focus on information security risks. ISO 42001 introduces the AI system impact assessment.

You can’t rely on your existing asset registers alone; you also need to assess risks across the AI model lifecycle.

- ISO 27001 focus: Is the training data secure? Is the model protected from poisoning attacks?
- ISO 42001 focus: Is the training data biased? Is the model transparent? Is there a risk of unintended outcomes?

Action: Evolve your Risk Treatment Methodology to include AI-specific impact categories. Your risk acceptance criteria now need to account for ethical risks, not just security breaches.
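To make the “single lens” idea concrete, here is a minimal Python sketch of an integrated risk register, assuming a conventional likelihood × impact scoring model on 1 to 5 scales. The category names, the `Risk` class, and the thresholds in `ACCEPT_THRESHOLD` are hypothetical illustrations; neither ISO 27001 nor ISO 42001 prescribes a particular scoring method.

```python
from dataclasses import dataclass, field

# Hypothetical impact categories: the security set follows the CIA triad,
# the AI set mirrors the questions above (bias, transparency, unintended outcomes).
# Neither standard mandates these names.
SECURITY_CATEGORIES = ("confidentiality", "integrity", "availability")
AI_CATEGORIES = ("bias", "transparency", "unintended_outcomes")

# Hypothetical acceptance thresholds on a 1-25 scale (likelihood x impact).
# The AI threshold is set lower to illustrate a tighter appetite for ethical harm.
ACCEPT_THRESHOLD = {"security": 8, "ai": 6}


@dataclass
class Risk:
    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impacts: dict[str, int] = field(default_factory=dict)  # category -> 1..5

    def score(self, categories: tuple[str, ...]) -> int:
        """Likelihood multiplied by the worst impact among the given categories."""
        worst = max((self.impacts.get(c, 0) for c in categories), default=0)
        return self.likelihood * worst

    def assess(self) -> dict[str, object]:
        """Score security and AI impacts separately, then apply both thresholds."""
        security = self.score(SECURITY_CATEGORIES)
        ai = self.score(AI_CATEGORIES)
        acceptable = (
            security <= ACCEPT_THRESHOLD["security"] and ai <= ACCEPT_THRESHOLD["ai"]
        )
        return {
            "description": self.description,
            "security_score": security,
            "ai_score": ai,
            "decision": "accept" if acceptable else "treat",
        }


# Example register mixing a classic security risk with an AI impact risk.
register = [
    Risk("Training data exposed via misconfigured storage", likelihood=2,
         impacts={"confidentiality": 4, "integrity": 2}),
    Risk("Credit model under-represents younger applicants", likelihood=3,
         impacts={"bias": 4, "transparency": 2}),
]

for risk in register:
    print(risk.assess())
```

In this example, the second risk scores zero on the security categories, so a security-only assessment would accept it, yet it exceeds the (hypothetical) AI threshold and is flagged for treatment. Scoring the two groups separately, rather than averaging them, keeps an ethical risk from being diluted by a low security impact.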

Step 3: Leveraging Annex A Controls

Organisations with a mature ISO 27001 implementation will be familiar with its Annex A controls. ISO 42001 has its own Annex A, and the two sets of controls overlap significantly in several areas.

- Data quality: under ISO 27001, this is an integrity issue. Under ISO 42001, data quality is vital to prevent algorithmic bias.
- Supplier relationships: you likely already vet suppliers for security (ISO 27001). You must now extend this due diligence to assess how third-party AI providers manage their AI risks (ISO 42001).

Step 4: Competence and Awareness (Clause 7)

Human error remains a primary vulnerability in cyber security. In the realm of AI, this vulnerability expands to include misuse of tools (e.g., entering sensitive corporate data into public large language models). We cover the risks of Shadow AI in our article here.

Your security awareness training needs to be updated. Employees need to understand not just how to keep passwords safe, but how to use AI tools responsibly.

Action: Integrate AI literacy into your regular security induction and refresher training. Ensure your data scientists and AI engineers have specific training on the ethical requirements of ISO 42001.

Step 5: Lifecycle Management (Operation – Clause 8)

ISO 27001 focuses heavily on the security of operations. ISO 42001 requires operational controls throughout the AI lifecycle: design, development, testing, deployment, and retirement.

You need to implement “guardrails” at the development stage. This aligns perfectly with the concept of Secure by Design, a principle Mondas advocates for in all software development.

A Unified Defence

The fears surrounding AI, such as uncontrolled data leakage and autonomous errors, are valid but manageable. ISO 42001 provides the framework to manage these risks, while ISO 27001 ensures the fortress remains secure.

Integrating these standards is complex. It requires a clear understanding of where cyber security ends and AI governance begins. For organisations aiming to lead in their sector, a unified approach is the only way to deploy AI with confidence. Our team is standing by to support your journey to ISO 42001 compliance. Read about our approach here or get in touch today.

This article was brought to you by Lance Nevill, Cyber Security Director at Mondas. Lance supports organisations on their compliance journeys, with a focus on ISO 42001 and ISO 27001. Learn more about Lance on LinkedIn here.