In just a few short years, AI has gone from a fascinating but niche curiosity to a cornerstone of enterprise productivity. From developers streamlining code to marketers crafting copy, generative AI tools are now deeply embedded in the way we work.

This rapid adoption has thrown a bit of a spanner in the works for data security. For Chief Information Security Officers (CISOs) and their boards, the volume of sensitive data potentially being fed into these AI models is staggering, and the risks of intellectual property leaks, data breaches, and compliance violations are very real.

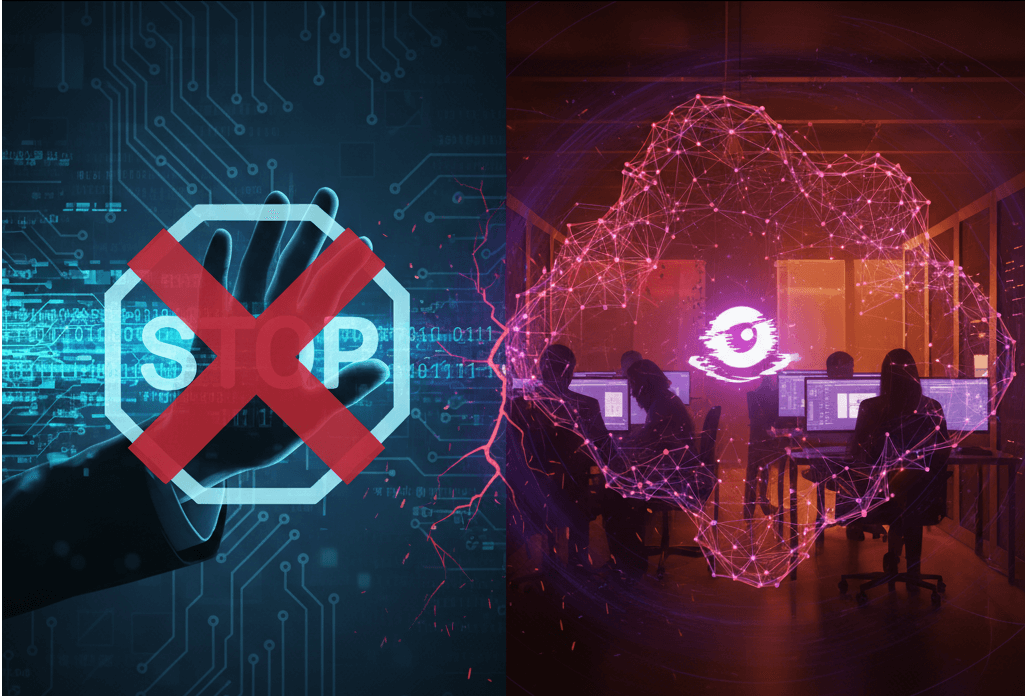

In a bid to manage this risk, a common first reaction is to mandate a complete ban on certain AI tools, like ChatGPT, across the corporate network. The thinking is straightforward: if you can’t control the risk, you eliminate the source.

But this seemingly simple solution often creates a new problem: Shadow AI.

The Paradox of the Ban

The issue is that employees grow accustomed to the productivity boost these tools provide. They’ve integrated them into their daily workflows, and they’ve seen the value firsthand. When a ban is announced, they face a dilemma: how do they do their jobs as efficiently without the AI assistance they’ve come to rely on?

The result is predictable: they find workarounds. They might use personal devices on an unmanaged network, or access the tools on a company machine through a personal account. Either way, they bypass the carefully crafted corporate security controls and create an entirely unmanaged and unmonitored risk. Data that would have been protected by corporate firewalls and monitoring tools is now being handled in a completely unprotected environment.

Nuanced Enforcement

A more sustainable and effective strategy is one of nuanced enforcement. Instead of banning these powerful tools outright, organisations should aim to enable safe AI usage: permitting AI in sanctioned contexts while intelligently intercepting risky behaviours in real time.

This approach requires a combination of three key elements:

- Policy & Education: Create a clear, easy-to-understand policy that defines what constitutes appropriate and inappropriate use of AI tools. This should be backed by continuous training that teaches employees the importance of using sanctioned, secure enterprise tools and highlights the dangers of using personal accounts or devices for company work.

- Technical Controls: Put controls in place that act on AI usage itself. This includes data loss prevention (DLP) systems that can recognise and block sensitive information before it is entered into AI prompts, even on approved tools. It also involves network monitoring to identify and flag attempts to access unsanctioned applications.

- Monitoring & Visibility: Security teams need a clear picture of how AI is being used across the organisation. This visibility allows them to identify instances of Shadow AI and take targeted, corrective action, rather than resorting to a blunt, company-wide ban.
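To make the technical controls and monitoring ideas more concrete, here is a minimal Python sketch of the kind of check a DLP layer might run before a prompt leaves the network, plus a helper that flags unsanctioned AI hosts seen in traffic logs. Everything in it is an illustrative assumption: the sanctioned endpoint `ai.example.com`, the host lists, the function names `check_prompt` and `flag_shadow_ai`, and the deliberately simplified detection patterns. Commercial DLP products use far richer classifiers and do not expose this API.

```python
import re
from dataclasses import dataclass, field
from urllib.parse import urlparse

# Hypothetical allowlist: the enterprise AI endpoint the organisation has sanctioned.
SANCTIONED_AI_HOSTS = {"ai.example.com"}

# Illustrative list of AI tool hosts to watch for in proxy or DNS logs.
KNOWN_AI_HOSTS = {"chatgpt.com", "chat.openai.com", "gemini.google.com", "ai.example.com"}

# Simplified detectors for illustration only; production DLP rule sets are far richer.
EMAIL_RE = re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b")
# Simplified UK National Insurance number: two letters, six digits, suffix A-D
# (ignores the official restrictions on which prefix letters are valid).
NI_RE = re.compile(r"\b[A-Z]{2}\s?\d{2}\s?\d{2}\s?\d{2}\s?[A-D]\b")
# Candidate payment card numbers: 13-19 digits, optionally separated by spaces or dashes.
CARD_RE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(candidate: str) -> bool:
    """Luhn checksum, used to cut false positives on card-like digit runs."""
    digits = [int(c) for c in candidate if c.isdigit()]
    if not 13 <= len(digits) <= 19:
        return False
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


@dataclass
class PromptDecision:
    allowed: bool
    reasons: list[str] = field(default_factory=list)


def check_prompt(destination_url: str, prompt: str) -> PromptDecision:
    """Decide whether a prompt may be sent to an AI tool, recording why if it is blocked."""
    reasons = []
    host = (urlparse(destination_url).hostname or "").lower()
    if host not in SANCTIONED_AI_HOSTS:
        reasons.append(f"unsanctioned AI destination: {host}")
    if EMAIL_RE.search(prompt):
        reasons.append("email address")
    if NI_RE.search(prompt):
        reasons.append("national insurance number")
    if any(luhn_valid(m.group()) for m in CARD_RE.finditer(prompt)):
        reasons.append("payment card number")
    return PromptDecision(allowed=not reasons, reasons=reasons)


def flag_shadow_ai(observed_hosts: list[str]) -> list[str]:
    """Return known AI hosts seen in traffic logs that are not on the sanctioned list."""
    seen = {h.lower() for h in observed_hosts}
    return sorted((seen & KNOWN_AI_HOSTS) - SANCTIONED_AI_HOSTS)
```

For example, `check_prompt("https://ai.example.com/chat", "Card 4111 1111 1111 1111 was declined")` returns a blocked decision citing a payment card number, while a prompt with no sensitive data sent to the same sanctioned endpoint is allowed. Returning reasons rather than a bare yes/no is a deliberate choice in this sketch: it lets the security team coach the user or take targeted action, instead of silently blocking and pushing them towards a personal account.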

By taking this more measured approach, security teams can transition from being the “Department of No” to a vital business partner. They provide a secure framework that allows employees to leverage the power of AI safely, ensuring that the organisation reaps the rewards of innovation without exposing itself to unnecessary risk.

The AI revolution isn’t slowing down, so the most effective strategy may be to guide its incredible power safely and securely rather than try to shut it out.

ISO 42001 Certification

Mondas can act as a partner for organisations navigating the complexities of ISO 42001 certification. This standard, which sets out requirements for an AI Management System (AIMS), is the world’s first to provide a framework for the responsible and ethical use of AI. The first step is often a Gap Analysis, which assesses a company’s current practices against ISO 42001 and identifies areas for improvement. Our Farnborough-based team can then develop a tailored implementation plan.

Mondas provides consultancy and support to address skills gaps, offering unbiased internal auditing expertise and helping to finalise preparations for the formal certification audit.

We can also integrate with or implement compliance platforms like Vanta, which supports a wide range of frameworks including ISO 42001, to automate controls monitoring and evidence gathering.

This holistic support helps organisations not only achieve certification but also build a robust, ongoing framework to prevent AI misuse and the resulting security breaches. Ultimately, Mondas helps to create a secure, transparent, and auditable environment for AI, ensuring that data is protected and that the use of these powerful tools is both compliant and trustworthy. Get in touch today to discuss more with the Mondas team.

Published 03/10/2025