We’re seeing a shift in the boardrooms of the UK. The initial excitement of Generative AI is being tempered by valid fears: Where is our data going? Who is making the decisions? Is our AI biased?

The rapid adoption of AI has often outpaced the governance required to manage it. This is where ISO 42001 could step in. Just as ISO 27001 became the gold standard for information security, ISO 42001 is rapidly becoming the benchmark for responsible AI. It is the world’s first international standard for an Artificial Intelligence Management System (AIMS).

At Mondas, we believe that true security is not just about locking doors; it’s about understanding who (or what) is in the room. Our guide outlines the practical journey from zero to hero: from an unmanaged AI landscape to a robust, fully certified AIMS.

The “Zero” State: Identifying the Gap

Before getting into certification, we need to address the “Zero” state. In this phase, organisations often suffer from “Shadow AI”. Departments may be using public Large Language Models (LLMs) to summarise sensitive contracts, or developers might be using AI coding assistants without oversight.

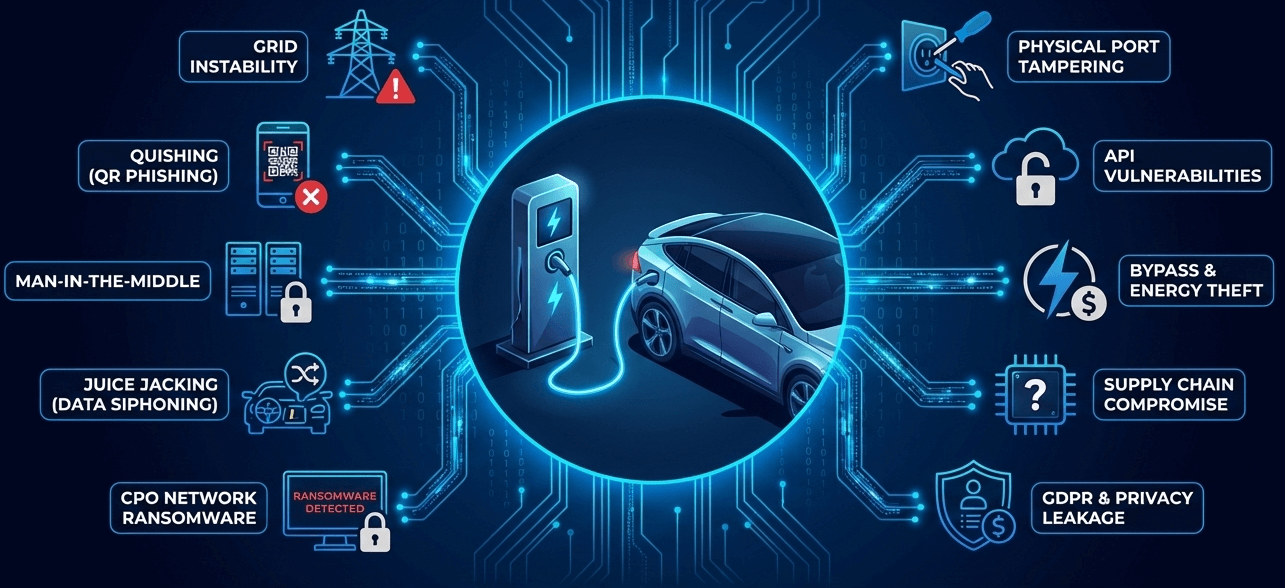

The risks here go beyond traditional security breaches. They now include:

- Black Box Decision Making: You can’t explain why an AI rejected a loan or a candidate.

- Model Drift: An AI system that was safe yesterday starts behaving unpredictably today.

- Bias and Ethics: Unintentional discrimination buried in training data.

Moving from this chaotic state to a structured AIMS requires a deliberate, step-by-step approach.

Phase 1: Context and Scoping (The “Who and What”)

You can’t govern what you can’t see. The first practical step is establishing the Context of the Organisation (Clause 4).

ISO 27001 focuses on information assets; ISO 42001 also requires you to catalogue your intelligence assets, meaning the AI systems you build or use. You need to define your role too: are you an AI developer (creating models), an AI deployer (using existing tools), or both?

Actionable Steps:

1. The AI Inventory: Audit every AI tool in your business, from the chatbot on your website to the predictive analytics in your finance department.

2. Define Scope: You don’t need to certify the entire organisation straight away. You might start your AIMS scope with your highest-risk AI system (e.g., a customer-facing recommendation engine) and expand later.

3. Leadership Commitment: AI governance can’t be a bottom-up initiative. It needs top-level buy-in (Clause 5) to enforce policies on ethical use.
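To make the inventory and scoping steps more concrete, here is a minimal Python sketch, assuming a simple in-house register. The field names, the `Role` labels and the two-factor risk tier are illustrative assumptions, not anything ISO 42001 prescribes; a real inventory would capture far more (supplier, data sources, intended purpose, owner sign-off).

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    """Hypothetical role labels echoing the developer/deployer split."""
    DEVELOPER = "developer"  # you build or train the model
    DEPLOYER = "deployer"    # you use an existing third-party tool


@dataclass
class AIAsset:
    """One row of a hypothetical AI inventory."""
    name: str
    owner: str               # accountable department or person
    role: Role
    customer_facing: bool
    uses_personal_data: bool

    def risk_tier(self) -> int:
        """Crude illustrative tier: one point per exposure factor (0 to 2)."""
        return int(self.customer_facing) + int(self.uses_personal_data)


def initial_scope(inventory: list[AIAsset]) -> list[AIAsset]:
    """Pick the highest-tier assets as a starting AIMS scope."""
    if not inventory:
        return []
    top = max(asset.risk_tier() for asset in inventory)
    return [asset for asset in inventory if asset.risk_tier() == top]


inventory = [
    AIAsset("Website chatbot", "Marketing", Role.DEPLOYER, True, True),
    AIAsset("Finance forecasting", "Finance", Role.DEPLOYER, False, False),
    AIAsset("Recommendation engine", "Product", Role.DEVELOPER, True, True),
]

for asset in initial_scope(inventory):
    print(asset.name, asset.role.value)
```

Starting from the highest tier mirrors the advice to scope your first AIMS around the riskiest system and expand later.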

Phase 2: The AI Risk Assessment (The “Why”)

This is where ISO 42001 diverges significantly from other standards. A standard cyber risk assessment looks at confidentiality, integrity, and availability. An AI Risk Assessment also looks at safety, fairness, and transparency. This can mean asking difficult questions:

- What is the impact on the individual if this AI makes a mistake?

- Is the training data representative of our diverse user base?

- Can we override the system if it goes rogue?

Mondas Insight: This is where best-in-class tooling becomes essential. Manual spreadsheets are just not good enough to track the probabilistic risks of AI. You need dynamic risk registers that can adapt as models evolve.
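As a rough illustration of what "dynamic" can mean, the sketch below records the model version each risk was assessed against, so a retrained model automatically flags its risks for reassessment. The 1 to 5 likelihood and impact scales, the score threshold and the class names are all assumptions for illustration, not a method defined by the standard.

```python
from dataclasses import dataclass, field

# Hypothetical dimensions: the classic CIA triad plus the AI-specific ones above.
DIMENSIONS = ("confidentiality", "integrity", "availability",
              "safety", "fairness", "transparency")


@dataclass
class AIRisk:
    """One entry in a hypothetical AI risk register."""
    system: str
    description: str
    dimension: str
    likelihood: int      # 1 (rare) to 5 (almost certain), assumed scale
    impact: int          # 1 (negligible) to 5 (severe), assumed scale
    model_version: str   # model version the risk was last assessed against

    def __post_init__(self) -> None:
        if self.dimension not in DIMENSIONS:
            raise ValueError(f"unknown dimension: {self.dimension}")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


@dataclass
class RiskRegister:
    risks: list[AIRisk] = field(default_factory=list)

    def add(self, risk: AIRisk) -> None:
        self.risks.append(risk)

    def stale(self, deployed: dict[str, str]) -> list[AIRisk]:
        """Risks assessed against a model version that is no longer deployed."""
        return [r for r in self.risks
                if deployed.get(r.system) != r.model_version]

    def above(self, threshold: int) -> list[AIRisk]:
        """Risks scoring at or above an assumed appetite threshold, worst first."""
        return sorted((r for r in self.risks if r.score >= threshold),
                      key=lambda r: r.score, reverse=True)


register = RiskRegister()
register.add(AIRisk("loan-scoring", "Lower approval rates for some postcodes",
                    "fairness", likelihood=3, impact=5, model_version="v1.2"))
register.add(AIRisk("loan-scoring", "Decisions cannot be explained to applicants",
                    "transparency", likelihood=4, impact=3, model_version="v1.2"))

# The model has been retrained to v1.3, so both risks need reassessing.
print([r.description for r in register.stale({"loan-scoring": "v1.3"})])
print([r.score for r in register.above(12)])  # [15, 12]
```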

Phase 3: Implementing the Controls (Annex A)

Once risks are identified, you need to treat them using the controls listed in Annex A of the standard. These are the practical “muscles” of your AIMS.

Key control areas include:

- Data Quality (A.7): Ensuring the data feeding your AI is clean, legally sourced, and as free from bias as possible.

- Human Oversight: Establishing “human-in-the-loop” protocols for critical decisions.

- Transparency: Mechanisms to inform users they are interacting with an AI.
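One way to picture a human-in-the-loop protocol is as a routing rule that decides which model outputs a person must finalise. This is a minimal sketch under stated assumptions: the `Decision` structure, the 0.85 confidence threshold and the "always review adverse critical outcomes" rule are hypothetical policy choices, and the right values depend on your own risk assessment.

```python
from dataclasses import dataclass

# Assumed policy threshold: below this model confidence, a person decides.
CONFIDENCE_THRESHOLD = 0.85


@dataclass
class Decision:
    """A model output awaiting finalisation (hypothetical structure)."""
    case_id: str
    outcome: str        # e.g. "approve" or "reject"
    confidence: float   # the model's own score, between 0 and 1
    critical: bool      # True if the outcome significantly affects a person


def route(decision: Decision) -> str:
    """Return who finalises the decision under a hypothetical HITL policy."""
    if decision.critical and decision.outcome == "reject":
        return "human"      # adverse outcomes on critical decisions always get review
    if decision.confidence < CONFIDENCE_THRESHOLD:
        return "human"      # low-confidence outputs are escalated
    return "automated"      # everything else can proceed automatically


print(route(Decision("A-101", "reject", 0.97, critical=True)))    # human
print(route(Decision("A-102", "approve", 0.62, critical=False)))  # human
print(route(Decision("A-103", "approve", 0.93, critical=True)))   # automated
```

Keeping the rule in one small, testable function also makes it easy to show an auditor exactly when a human is involved.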

The Role of Continuous Monitoring

In traditional software, you write code, test it, and deploy it. An AI system’s behaviour, by contrast, can change after deployment: models are retrained, and the real-world data they see drifts away from the data they were trained on. That is why ISO 42001 places a heavy emphasis on Continuous Monitoring. You need tools in place that alert you if a model’s accuracy dips or if it begins to hallucinate.
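A minimal sketch of the accuracy side of this, assuming you eventually learn the true outcome for each prediction (for example, whether a flagged transaction really was fraud). The `AccuracyMonitor` class, the baseline, tolerance and window size are hypothetical; detecting hallucinations in generative models needs different techniques that this does not cover.

```python
from collections import deque
from typing import Optional


class AccuracyMonitor:
    """Hypothetical rolling-window accuracy check against a baseline."""

    def __init__(self, baseline: float, tolerance: float = 0.05, window: int = 100):
        self.baseline = baseline      # accuracy measured at validation time
        self.tolerance = tolerance    # how far accuracy may fall before alerting
        self.window = window
        self.outcomes: deque = deque(maxlen=window)  # True if prediction was correct

    def record(self, prediction: str, actual: str) -> None:
        """Store whether a prediction matched the later-confirmed outcome."""
        self.outcomes.append(prediction == actual)

    def accuracy(self) -> Optional[float]:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def should_alert(self) -> bool:
        """Alert only once the window is full and accuracy is below baseline minus tolerance."""
        if len(self.outcomes) < self.window:
            return False
        return self.accuracy() < self.baseline - self.tolerance


monitor = AccuracyMonitor(baseline=0.92, tolerance=0.05, window=10)
for prediction, actual in [("yes", "yes")] * 8 + [("yes", "no")] * 2:
    monitor.record(prediction, actual)

print(monitor.accuracy(), monitor.should_alert())  # 0.8 True
```

Waiting for a full window avoids alerting on the first handful of results; in practice the alert would feed into your incident process rather than just printing.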

Phase 4: Competence and Culture

Your best firewall is your people. ISO 42001 (Clause 7) requires that the people whose work affects your AI systems are competent. This involves:

- Training staff on the Ethical AI Policy.

- Ensuring developers understand Secure by Design principles for machine learning.

- Creating a culture where employees feel safe reporting “weird” AI behaviour without fear of blame.

Phase 5: The Audit and Certification

The final step is independent validation.

1. Internal Audit: A dry run to identify gaps.

2. Stage 1 Audit (Document Review): The auditor checks if your AIMS is designed correctly. Do you have the right policies? Is the scope defined?

3. Stage 2 Audit (Implementation): The auditor checks if the AIMS is working. They will look for evidence of risk assessments, logs of model monitoring, and records of training.

Trust as a Competitive Advantage

Achieving ISO 42001 certification isn’t just a compliance exercise; it’s a declaration of trust. In an era where deepfakes and data misuse are dominating the headlines, being able to prove that your AI is managed, ethical, and secure is a powerful differentiator.

At Mondas, we combine deep expertise in information security with the latest AI governance tools to help you navigate this journey. Whether you are at “Zero” or ready to formalise your processes, the path to AIMS is clearer than you think. Contact us today to discover how we could support your journey to ISO 42001 certification.

This article was brought to you by Michelle Claridge, Head of Governance, Risk and Compliance at Mondas. Michelle is a GRC expert who has brought AI-specific security measures to organisations on their journey to ISO 42001. Learn more about Michelle on LinkedIn.

Find out more about the ISO standard here: https://www.iso.org/standard/42001. Learn more from the National Cyber Security Centre (NCSC).